IFPEN, together with Friedrich-Alexander-Universität, has extended waLBerla, a widely applicable lattice Boltzmann method (LBM) framework, to the large-eddy simulation (LES) of entire wind farms.


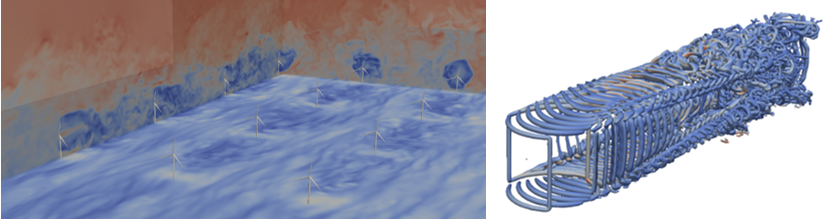

Using actuator-line models to represent the wind turbines, the framework achieves unprecedentedly short computing times, thanks to the LBM and its excellent scalability on graphics processing units (GPUs). Advanced collision operators, such as the cumulant LBM, ensure numerical stability even at high Reynolds numbers.
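To make the LBM part concrete, here is a minimal sketch of one lattice Boltzmann time step: a local collision followed by streaming to neighbouring nodes. It is a deliberate simplification, not waLBerla-Wind's scheme: it assumes a 2D D2Q9 lattice, periodic boundaries and the simple BGK single-relaxation-time operator, whereas the simulations described here are 3D and rely on the more elaborate cumulant operator. All function names are hypothetical.

```python
import numpy as np

# D2Q9 lattice: discrete velocities c_i and weights w_i
C = np.array([[0, 0], [1, 0], [0, 1], [-1, 0], [0, -1],
              [1, 1], [-1, 1], [-1, -1], [1, -1]])
W = np.array([4 / 9] + [1 / 9] * 4 + [1 / 36] * 4)

def equilibrium(rho, u):
    """Second-order equilibrium populations f_i^eq(rho, u), shape (9, nx, ny)."""
    cu = np.einsum("id,dxy->ixy", C, u)      # c_i . u at every node
    usq = np.einsum("dxy,dxy->xy", u, u)     # |u|^2 at every node
    return W[:, None, None] * rho * (1 + 3 * cu + 4.5 * cu**2 - 1.5 * usq)

def macroscopic(f):
    """Density and velocity as the zeroth and first moments of the populations."""
    rho = f.sum(axis=0)
    u = np.einsum("id,ixy->dxy", C, f) / rho
    return rho, u

def bgk_step(f, tau):
    """One collide-and-stream step (BGK stand-in, NOT the cumulant operator)."""
    rho, u = macroscopic(f)
    f = f - (f - equilibrium(rho, u)) / tau  # collision: relax towards equilibrium
    for i, (cx, cy) in enumerate(C):         # streaming on a periodic domain
        f[i] = np.roll(f[i], shift=(cx, cy), axis=(0, 1))
    return f

# Usage: a uniform flow must stay uniform and conserve mass.
nx, ny = 32, 16
rho0 = np.ones((nx, ny))
u0 = np.zeros((2, nx, ny))
u0[0] = 0.05
f = equilibrium(rho0, u0)
for _ in range(10):
    f = bgk_step(f, tau=0.6)
rho, u = macroscopic(f)
print(rho.sum(), u[0].mean())
```

Because the collision is purely local and streaming only touches nearest neighbours, every node can be updated independently, which is one reason the method maps so well onto GPUs.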


The waLBerla-Wind extension was developed during the EoCoE-II project. It features a holistic CPU/GPU implementation built on a single code base shared by CPU and GPU hardware, which keeps the code easy to extend and maintain. Static mesh refinement is currently available on CPUs. Thanks to the versatility of its actuator-line implementation, waLBerla-Wind can simulate wind farms with different turbine types, such as horizontal- or vertical-axis wind turbines.
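The actuator-line idea can be sketched as follows: aerodynamic forces evaluated at points along each blade are smeared onto the fluid grid as a body-force density. The snippet below assumes the isotropic Gaussian regularisation kernel commonly used in actuator-line models, `eta(d) = exp(-(d/eps)^2) / (eps^3 * pi^(3/2))`; whether waLBerla-Wind uses exactly this kernel is an assumption, and the grid layout, blade geometry, forces and function names are all hypothetical.

```python
import numpy as np

def gaussian_kernel(dist, eps):
    """Isotropic 3D Gaussian kernel eta(d) = exp(-(d/eps)^2) / (eps^3 * pi^(3/2))."""
    return np.exp(-(dist / eps) ** 2) / (eps ** 3 * np.pi ** 1.5)

def spread_forces(points, forces, grid_shape, dx, eps):
    """Project point forces (N x 3) onto a uniform grid as a force density (3, nx, ny, nz)."""
    # Node coordinates x_i = i * dx (hypothetical grid layout).
    X, Y, Z = np.meshgrid(*[np.arange(n) * dx for n in grid_shape], indexing="ij")
    density = np.zeros((3,) + tuple(grid_shape))
    for p, f in zip(points, forces):
        dist = np.sqrt((X - p[0]) ** 2 + (Y - p[1]) ** 2 + (Z - p[2]) ** 2)
        density += f[:, None, None, None] * gaussian_kernel(dist, eps)
    return density

# Hypothetical blade: 10 actuator points on a straight line, same force at each point.
dx, eps = 1.0, 2.0
points = np.column_stack([np.full(10, 20.0), np.linspace(10.0, 30.0, 10), np.full(10, 20.0)])
forces = np.tile([0.5, 0.0, -0.1], (10, 1))
density = spread_forces(points, forces, (40, 40, 40), dx, eps)

# With eps = 2 dx and points far from the boundaries, the smeared field
# carries essentially the same total force as the actuator points.
total = density.sum(axis=(1, 2, 3)) * dx ** 3
print(total)
```

In this sketch the spreading only needs point positions and forces, so the same routine applies whether the points lie on a horizontal-axis rotor or on a vertical-axis blade.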

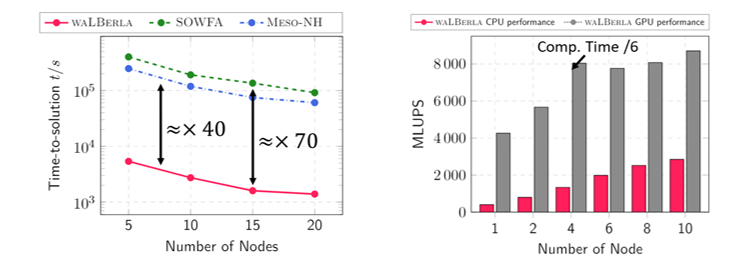

The solver has been validated against wind-tunnel experiments (NewMexico measurements) and compared with two Navier-Stokes-based approaches, Meso-NH and SOWFA (OpenFOAM). First scaling studies (Figure 2) show a speed-up of about 40 to 70 over the Navier-Stokes-based approaches on CPUs, and of up to 400 on GPUs. For a simulated physical time of 1200 s, the simulations run faster than real time on 5 GPU nodes.
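The two figures of merit quoted above are simple ratios. As a minimal sketch (function names hypothetical, timings purely illustrative and not taken from the study): the speed-up is the wall-clock time of the reference solver divided by that of the LBM solver, and a simulation runs faster than real time when the simulated physical time exceeds the wall-clock time needed to compute it.

```python
def speed_up(t_wall_reference, t_wall_lbm):
    """How many times faster the LBM run is than the reference (e.g. Navier-Stokes) run."""
    return t_wall_reference / t_wall_lbm

def real_time_factor(t_physical, t_wall):
    """Simulated seconds per wall-clock second; values above 1 mean faster than real time."""
    return t_physical / t_wall

# Illustrative numbers only: 1200 s of physical time in a hypothetical 800 s of wall-clock time.
rtf = real_time_factor(1200.0, 800.0)
print(rtf, rtf > 1.0)              # 1.5 True
# Hypothetical timings giving a speed-up of 400:
print(speed_up(20000.0, 50.0))     # 400.0
```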

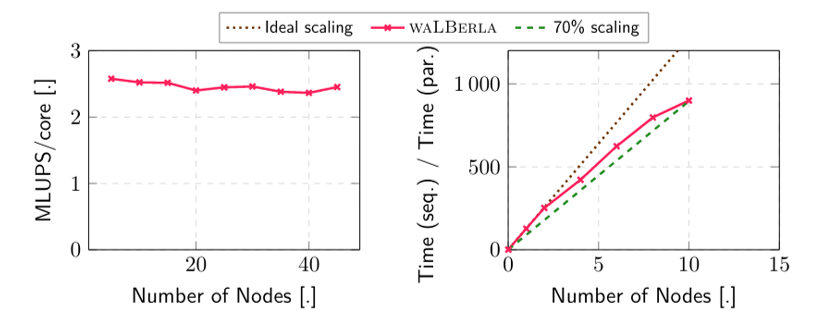

Weak and strong scaling experiments (Figure 3) were run on the Topaze supercomputer using up to 45 nodes, each containing two AMD Milan CPUs at 2.45 GHz (AVX2) with 64 cores per CPU. For the GPU runs, each node is equipped with four Nvidia A100 GPUs.
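The hardware figures translate into a simple core count, and scaling results are usually summarised as parallel efficiencies. The sketch below uses the textbook definitions of strong-scaling efficiency (fixed total problem, ideal runtime proportional to 1/N) and weak-scaling efficiency (fixed problem per node, ideal runtime constant); the function names and the timings in the usage lines are hypothetical, not measurements from Figure 3.

```python
def total_cores(nodes, cpus_per_node=2, cores_per_cpu=64):
    """CPU cores on `nodes` nodes with 2 x 64-core AMD Milan CPUs each."""
    return nodes * cpus_per_node * cores_per_cpu

def strong_scaling_efficiency(t_base, n_base, t_n, n):
    """Fixed total problem size: ideally t_n = t_base * n_base / n, giving efficiency 1."""
    return (t_base * n_base) / (t_n * n)

def weak_scaling_efficiency(t_base, t_n):
    """Fixed problem size per node: ideally the runtime stays constant, giving efficiency 1."""
    return t_base / t_n

print(total_cores(45))                              # 45 nodes x 2 CPUs x 64 cores = 5760
# Hypothetical timings (seconds), for illustration only:
print(strong_scaling_efficiency(90.0, 1, 2.5, 45))  # 0.8
print(weak_scaling_efficiency(10.0, 12.5))          # 0.8
```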

Future work will include:

- Extension of the solver to the simulation of atmospheric boundary layers

- Hybrid CPU/GPU simulations that exploit all available hardware

- Mesh refinement on the GPUs

- Making the code exascale-ready

waLBerla-Wind team: H. Schottenhamml, A. Anciaux-Sedrakian, F. Blondel, U. Rüde
